Strategic Development

PickNik’s mission is to reduce development time and costs for your robotic applications. We write robotics software with you or for you.

Interacting with a dynamic and uncertain world requires taking in information and making sense of it. Perception makes it possible for a robot to:

- Find and pick up objects of interest

- Avoid collisions in dynamic settings

- Map out and move around its environment

- Respond to moving objects

Spatial AI

Computer vision has been revolutionized by deep learning, and the cost of training a neural network can be reduced by fine-tuning existing, pretrained networks and by using simulation to generate training data. To take advantage of these advances in a robotic application, however, the information extracted from the pixels has to be projected into the real, 3-D world. Spatial AI provides 3-D situational awareness from raw sensor data. Combining machine learning with the geometry of image formation, or applying AI directly to 3-D point cloud data, enables a robot to move safely and effectively in a dynamic environment.
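To make "the geometry of image formation" concrete, here is a minimal sketch of lifting a pixel into 3-D under an ideal pinhole camera model with no lens distortion. The intrinsic parameters (`fx`, `fy`, `cx`, `cy`), the example pixel, and the depth value are hypothetical; in a real system they would come from camera calibration, a detector's output, and a depth sensor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PinholeIntrinsics:
    """Ideal pinhole intrinsics in pixels (assumed here: no lens distortion)."""
    fx: float  # focal length along image x
    fy: float  # focal length along image y
    cx: float  # principal point, x
    cy: float  # principal point, y


def back_project(u: float, v: float, depth: float,
                 K: PinholeIntrinsics) -> tuple[float, float, float]:
    """Lift pixel (u, v) with metric depth Z to a 3-D point in the camera frame.

    Frame convention assumed here: x right, y down, z along the optical axis.
    """
    if depth <= 0.0:
        raise ValueError("depth must be positive")
    x = (u - K.cx) * depth / K.fx
    y = (v - K.cy) * depth / K.fy
    return (x, y, depth)


def project(point: tuple[float, float, float],
            K: PinholeIntrinsics) -> tuple[float, float]:
    """Forward pinhole projection; undoes back_project for a known depth."""
    x, y, z = point
    return (K.fx * x / z + K.cx, K.fy * y / z + K.cy)


if __name__ == "__main__":
    # Hypothetical intrinsics for a 640x480 image.
    K = PinholeIntrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    # Hypothetical detection center (pixels) and depth reading (meters).
    point = back_project(420.0, 240.0, 1.5, K)
    print(point)
    print(project(point, K))
```

With these values, a detection centered 100 pixels right of the principal point at 1.5 m depth lands 0.25 m to the right of the optical axis. The `project` function is included as a sanity check: projecting the recovered point should return the original pixel.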

Which sensor should I use?

Every perception system starts with a sensor. At PickNik we have experience with:

- Depth cameras: Increasingly common in robotics, an RGB-D camera provides a color image along with the 3-D structure of the scene.

- Lidar: Offering high accuracy and long range, lidar is a leading sensor for autonomous vehicles. Smaller, less expensive flash lidars are also available and are well suited to manipulation applications.

- IMU: An inertial measurement unit packages accelerometers, gyroscopes, and often a magnetometer (compass). It functions as the robot’s inner ear, sensing the robot’s own motion without relying on external signals.

- Sonar: Sonar provides 3-D perception in underwater environments, where cameras are hampered by the absorption, scattering, and refraction of light.

- Force/torque sensor: A force/torque sensor lets an arm measure how much force it is exerting on another object, which is especially useful for contact tasks like wiping a surface.

- Encoders: Used to determine how many times a wheel has turned or the angle of a robotic arm joint, encoders are simple and common sensors in many robotic applications.
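As a small worked example of how encoder readings become useful quantities, the sketch below converts a raw count into a joint angle and into the distance a wheel has rolled. It assumes an incremental encoder with a known number of counts per revolution mounted directly on the wheel or joint shaft (no gearbox) and a wheel that does not slip; the counts-per-revolution and wheel-radius values are hypothetical.

```python
import math


def ticks_to_angle(ticks: int, counts_per_rev: int) -> float:
    """Convert an encoder count to a rotation angle in radians.

    Assumes the encoder is mounted directly on the shaft being measured.
    """
    if counts_per_rev <= 0:
        raise ValueError("counts_per_rev must be positive")
    return 2.0 * math.pi * ticks / counts_per_rev


def wheel_distance(ticks: int, counts_per_rev: int, wheel_radius_m: float) -> float:
    """Distance rolled by a wheel, assuming no slip: arc length = angle * radius."""
    return ticks_to_angle(ticks, counts_per_rev) * wheel_radius_m


if __name__ == "__main__":
    CPR = 4096        # hypothetical counts per revolution
    RADIUS_M = 0.1    # hypothetical wheel radius in meters
    # Joint angle, in degrees, after a quarter turn of the shaft.
    print(math.degrees(ticks_to_angle(1024, CPR)))
    # Distance rolled after three full wheel turns.
    print(wheel_distance(3 * CPR, CPR, RADIUS_M))
```

The no-slip assumption is the weak point of this calculation: any wheel slip shows up directly as distance error.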

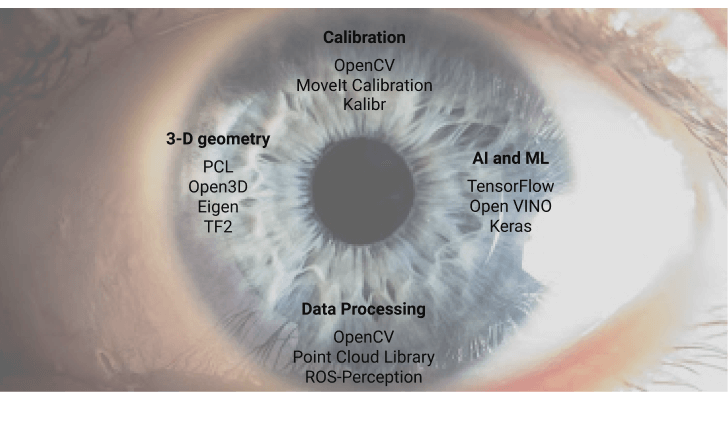

Computer Vision and Open Source

Perception researchers and the open source community have developed a myriad of tools that accelerate the development of specialized perception systems. If a robot needs to identify which fruit is ripe for picking, or determine which kitchen surfaces to clean, there is likely an open source tool that can be adapted to the challenge.

Robotics Perception Consulting

PickNik offers expertise in many areas of computer vision:

- Object recognition, localization, and tracking

- Visual servoing and spatially aware manipulation

- Sensor calibration and hand-eye coordination

- 3-D reconstruction

- Semantic scene understanding

- AI and machine learning