Real-Time Programming: Priority Inversion

Many robotics applications have real-time requirements, which means they must execute sections of code within a strict deadline. A control loop is one such example; failing to complete the control loop in time may cause controller instability or trigger protective stops! For ROS applications, ros2_control is a framework for real-time control of robots using ROS 2. Whether you are writing a real-time application with ros2_control or your own custom real-time application, real-time code must be written so that latency stays bounded and deadlines are met.

In this blog post, we’re going to discuss priority inversion, a potential source of unbounded latency that needs to be avoided in real-time code. Priority inversion is very much a concern for robotics: in a famous example, priority inversion caused repeated system resets on the Mars Pathfinder lander!

The ros2_control architecture has a real-time thread that handles robot updates and a non-real-time thread for the ROS executor. These threads pass data to each other through shared memory. Pairing a high-priority real-time thread with low-priority non-real-time threads that share data with it is a common way to structure real-time applications.
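As a rough sketch of how such a high-priority thread is commonly created on Linux, the POSIX thread attributes below request the `SCHED_FIFO` policy. The helper names and the priority value (80) are assumptions for illustration, not taken from ros2_control. Creating `SCHED_FIFO` threads usually needs `CAP_SYS_NICE` or a suitable `RLIMIT_RTPRIO`, so this sketch falls back to default scheduling when the request is refused.

```cpp
#include <pthread.h>
#include <sched.h>
#include <atomic>
#include <cstdio>

// Hypothetical stand-in for the real-time work (e.g. a control loop).
std::atomic<int> g_iterations{0};

void* CountIterations(void*) {
  for (int i = 0; i < 100; ++i) g_iterations.fetch_add(1);
  return nullptr;
}

// Hypothetical helper: starts `body` on a SCHED_FIFO thread at `priority` (1-99 on Linux).
// If that fails (most commonly EPERM without privileges), it falls back to default
// scheduling and reports which policy was used via *is_realtime.
// A production real-time application would usually fail loudly instead of falling back.
bool StartControlThread(pthread_t* thread, void* (*body)(void*), int priority, bool* is_realtime) {
  pthread_attr_t attr;
  pthread_attr_init(&attr);
  // Without PTHREAD_EXPLICIT_SCHED the new thread inherits the creator's policy
  // and the policy/priority set below are ignored.
  pthread_attr_setinheritsched(&attr, PTHREAD_EXPLICIT_SCHED);
  pthread_attr_setschedpolicy(&attr, SCHED_FIFO);
  sched_param param{};
  param.sched_priority = priority;
  pthread_attr_setschedparam(&attr, &param);
  int rc = pthread_create(thread, &attr, body, nullptr);
  pthread_attr_destroy(&attr);
  *is_realtime = (rc == 0);
  if (rc != 0) {
    std::fprintf(stderr, "SCHED_FIFO thread refused (error %d); using default scheduling\n", rc);
    rc = pthread_create(thread, nullptr, body, nullptr);
  }
  return rc == 0;
}
```

In a ros2_control-style layout, the ROS executor would keep running on a default-priority thread while the control loop runs on the thread started this way.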

When sharing data between multiple threads, you have to consider synchronization. Reading and writing the same variable from two threads without synchronization is a data race, which is undefined behavior in C++ and can corrupt the shared data. A mutex can guard access to shared variables and prevent these issues. This is fine in a non-real-time application, but in a real-time application we must also ensure that our code does not introduce impermissible latency. Here it’s important to know that guarding shared variables with a std::mutex can cause unbounded latency due to priority inversion.

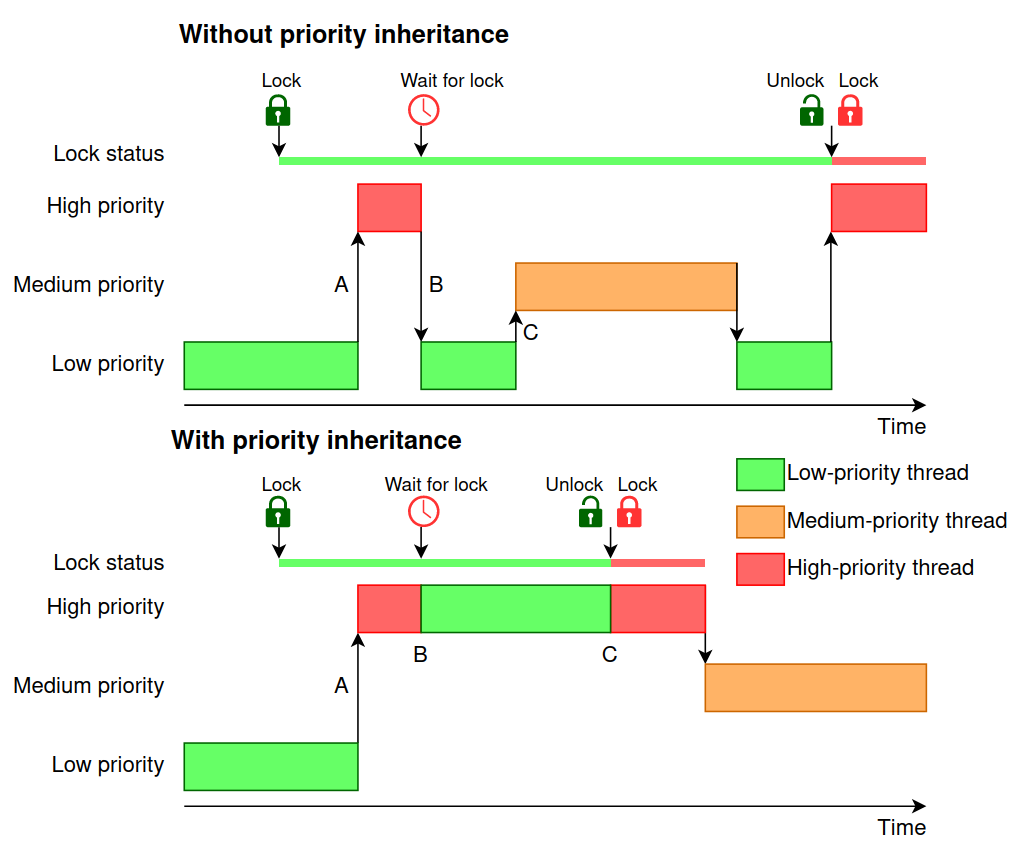

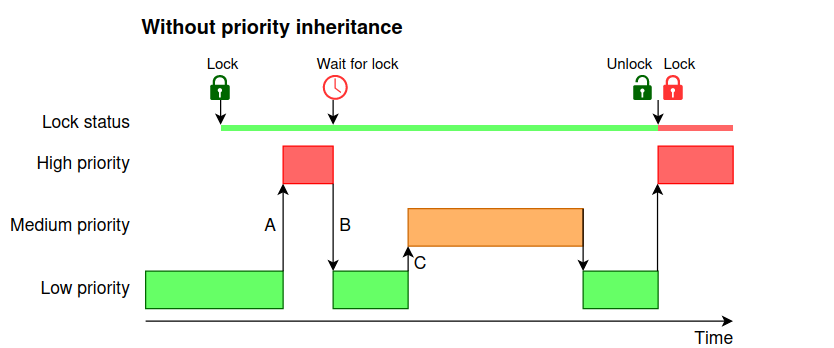

Priority inversion can occur when a low-priority thread holds a lock that a higher-priority thread needs. This is illustrated in the top half of the accompanying diagram:

In this image, we start with the low-priority thread acquiring a lock. Let’s follow through the timeline and see what happens when we have a priority inversion.

- At time A, a high-priority thread becomes ready and preempts the low-priority thread.

- At time B, the high-priority thread needs to access the resources protected by the lock that the low-priority thread holds. Since the high-priority thread is blocked, the OS switches back to running the low-priority thread.

- At time C, we have a problem: a medium-priority task preempts the low-priority thread. This medium-priority task may not be real-time, and it can take an unbounded amount of time to complete.

In this situation, our high-priority, real-time thread is blocked because the low-priority thread holds the lock, and the low-priority thread cannot run because it has been preempted by the medium-priority thread. Our real-time process is effectively blocked by a lower-priority task that we may not even control! We must wait until the medium-priority task completes before the low-priority thread can run again. Only then can the low-priority thread finish its work and release the lock, so that the high-priority thread can acquire it and complete its own work.

Let’s look at an example from the ROSCon 2023 Real-Time Programming workshop repository.

In this example, we have a PID controller that we’d like to run in real time at 1 kHz. We’d like to expose a way to set the PID gains from another thread and read them from the real-time thread every iteration. To do this, we have a Set() and a Get() method. To ensure the PID constants are not read while another thread is writing them, both methods are protected by a lock. This lock uses a std::mutex, which leaves us vulnerable to priority inversion.

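```cpp
#include <mutex>

// PIDConstants (the controller gains) is defined elsewhere in the workshop code.

struct MultipleData {
  void Set(PIDConstants pid_constants) {
    const std::scoped_lock lock(pid_constant_mutex_);
    pid_constants_ = pid_constants;
  }

  PIDConstants Get() {
    const std::scoped_lock lock(pid_constant_mutex_);
    return pid_constants_;
  }

 private:
  // std::mutex does not support priority inheritance
  using mutex = std::mutex;

  mutex pid_constant_mutex_;
  // Value-initialized so a Get() before the first Set() never reads indeterminate values.
  PIDConstants pid_constants_{};
};
```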
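The 1 kHz requirement means each iteration must finish before the next period starts. One common way to run such a loop on Linux is to sleep until an absolute wakeup time with `clock_nanosleep(..., TIMER_ABSTIME, ...)`, so timing errors do not accumulate from one iteration to the next. The sketch below is our own illustration (the helper names and the overrun counter are hypothetical, not from the workshop code).

```cpp
#include <time.h>
#include <cstdint>

constexpr std::int64_t kNsPerSec = 1'000'000'000;

// Advances `t` by `ns` nanoseconds, keeping tv_nsec in [0, 1e9).
void AddNanoseconds(timespec* t, std::int64_t ns) {
  t->tv_sec += static_cast<time_t>(ns / kNsPerSec);
  t->tv_nsec += static_cast<long>(ns % kNsPerSec);
  if (t->tv_nsec >= kNsPerSec) {
    t->tv_nsec -= kNsPerSec;
    t->tv_sec += 1;
  }
}

bool IsAfter(const timespec& a, const timespec& b) {
  return a.tv_sec > b.tv_sec || (a.tv_sec == b.tv_sec && a.tv_nsec > b.tv_nsec);
}

// Calls `update` once per period using absolute wakeup times on CLOCK_MONOTONIC.
// Returns how many iterations finished after their deadline (the next wakeup time).
template <typename Update>
int RunPeriodicLoop(int iterations, std::int64_t period_ns, Update&& update) {
  timespec next{};
  clock_gettime(CLOCK_MONOTONIC, &next);
  int overruns = 0;
  for (int i = 0; i < iterations; ++i) {
    AddNanoseconds(&next, period_ns);  // deadline for this iteration
    update();  // e.g. read the PID constants with Get() and compute the control output
    timespec now{};
    clock_gettime(CLOCK_MONOTONIC, &now);
    if (IsAfter(now, next)) ++overruns;
    // Returns immediately if `next` has already passed; EINTR handling omitted in this sketch.
    clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &next, nullptr);
  }
  return overruns;
}
```

With a MultipleData instance named `data`, the controller body might look like `RunPeriodicLoop(n, 1'000'000, [&] { const PIDConstants gains = data.Get(); /* ... */ });`, where 1'000'000 ns is the 1 ms period.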

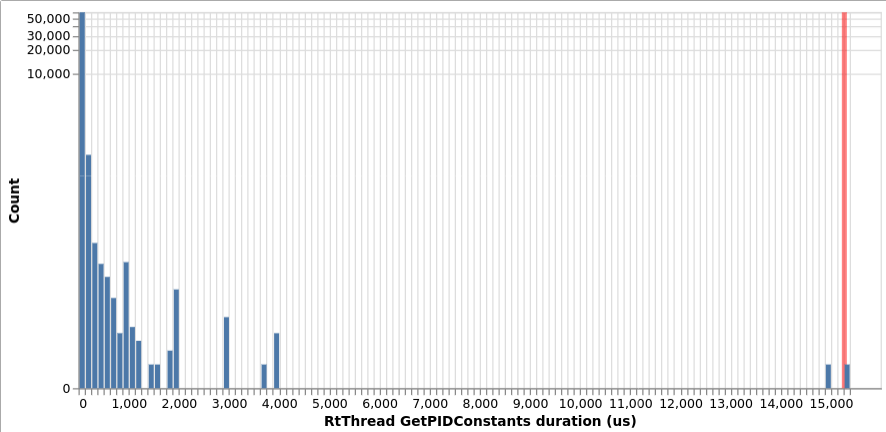

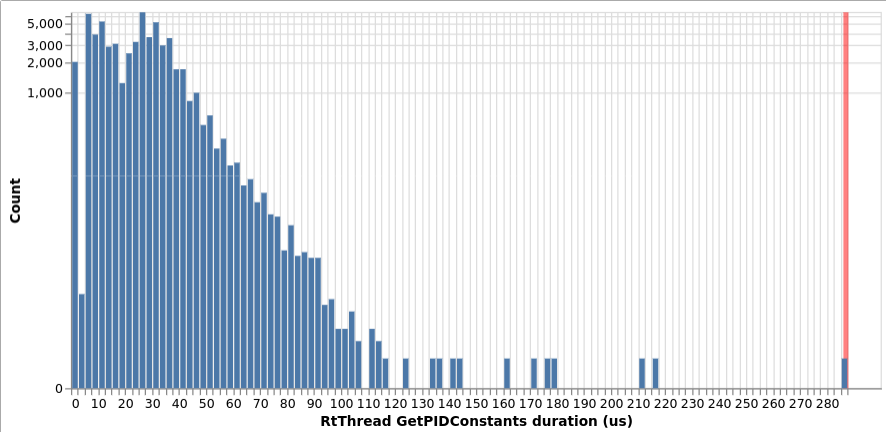

The accompanying figures show an example trace from running the PID controller with the std::mutex.

The histogram shows how long it took the real-time thread to get the PID constants, which it does every iteration. Since the PID controller is meant to run at 1 kHz, the real-time thread has 1 ms to run its control loop, including getting those PID constants. Most iterations were quite short, but for real-time code we care about keeping the worst-case latency bounded. In this case, the maximum latency was 15 296 µs (about 15.3 ms), more than fifteen times our 1 ms (1000 µs) deadline. Just by using a std::mutex, we have failed to meet the deadline for our controller!
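A histogram like this can be gathered with a small recorder that times each Get() call and tracks the worst case. This is a sketch under our own assumptions (the class name, the 100 µs bucket width and the 20 buckets are arbitrary), not the tooling used to produce the figures. It uses fixed-size storage so that recording does not allocate inside the real-time loop.

```cpp
#include <algorithm>
#include <array>
#include <chrono>
#include <cstddef>
#include <cstdint>

// Hypothetical latency recorder for a real-time loop: fixed-size storage, no allocation.
class LatencyRecorder {
 public:
  static constexpr std::int64_t kBucketWidthUs = 100;  // arbitrary bucket width
  static constexpr std::size_t kNumBuckets = 20;       // last bucket collects >= 1900 us

  // Times one call of `fn` (e.g. a Get() of the PID constants) and records it.
  template <typename Fn>
  void Measure(Fn&& fn) {
    const auto start = std::chrono::steady_clock::now();
    fn();
    const auto end = std::chrono::steady_clock::now();
    Record(std::chrono::duration_cast<std::chrono::microseconds>(end - start).count());
  }

  void Record(std::int64_t latency_us) {
    max_us_ = std::max(max_us_, latency_us);  // the worst case is what real-time cares about
    const auto bucket = static_cast<std::size_t>(latency_us / kBucketWidthUs);
    ++buckets_[std::min(bucket, kNumBuckets - 1)];
    ++count_;
  }

  std::int64_t max_us() const { return max_us_; }
  std::uint64_t count() const { return count_; }
  std::uint64_t bucket(std::size_t i) const { return buckets_.at(i); }

 private:
  std::int64_t max_us_ = 0;
  std::uint64_t count_ = 0;
  std::array<std::uint64_t, kNumBuckets> buckets_{};
};
```

In the control loop, this would wrap the Get() call, e.g. `recorder.Measure([&] { gains = data.Get(); });`, and `max_us()` is the number to compare against the 1000 µs budget.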

While the other thread is calling Set(), the real-time thread cannot Get() the PID constants until the lock is released. This is known as lock contention. However, accessing a variable in memory takes on the order of 100 ns (or less if it’s in cache) [System Performance 2e by Brendan Gregg, Section 2, Table 2.2]. So why did it take 15 296 µs for the real-time thread to finish? This is an example of priority inversion, and why we need to take precautions to prevent it.

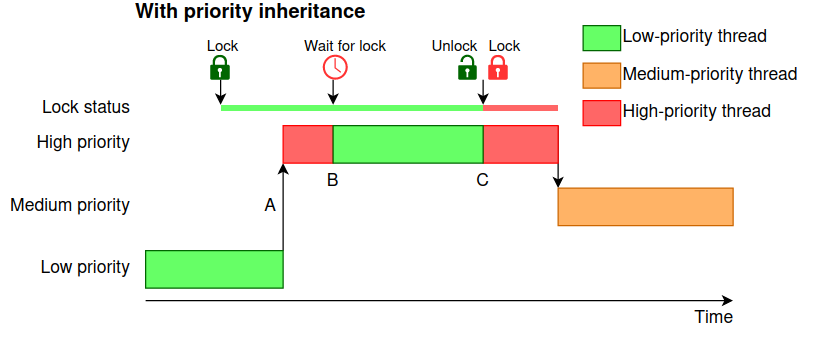

Now that we’ve seen the effects of priority inversion, how can we avoid it in our real-time applications? There are many techniques, including a variety of lock-free algorithms. On Linux, we also have the option of using a mutex that supports priority inheritance. The accompanying diagram shows what happens in the same situation as above, except now with priority inheritance enabled.

This situation starts off the same way as before: the low-priority thread acquires the lock. Let’s follow the timeline and see the difference that priority inheritance makes:

- At time A, the low-priority thread is preempted by a high-priority thread.

- The difference happens at time B, when the high-priority thread is blocked by the lock. With priority inheritance, a low-priority thread that holds a lock blocking a high-priority thread inherits the high-priority thread’s priority. A medium-priority thread therefore cannot preempt the boosted low-priority thread.

- The boosted low-priority thread finishes with the lock at C, and the high-priority thread can then acquire the lock and run. Only then can the medium-priority thread run.
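To make the two timelines concrete, here is a toy single-CPU, tick-based scheduler simulation. It is our own illustration, not real OS behavior or code from the workshop. Each hypothetical task runs some unlocked work, then some work inside one shared lock. The scheduler always runs the highest-priority task that is ready and not blocked, and with `inherit` set, the lock holder runs at the priority of its highest-priority waiter.

```cpp
#include <algorithm>
#include <vector>

// One hypothetical task in the toy model. Higher value = higher priority.
struct Task {
  int priority;
  int release;      // tick at which the task becomes ready
  int before_lock;  // ticks of work before it needs the lock
  int locked;       // ticks of work inside the critical section (0 = never locks)
  int finish = -1;  // tick at which it completed, filled in by Simulate()
};

// Simulates one CPU and one lock; returns each task's finish tick.
std::vector<int> Simulate(std::vector<Task> tasks, bool inherit) {
  const int n = static_cast<int>(tasks.size());
  int holder = -1;  // index of the task holding the lock, or -1 if free
  int remaining = n;
  for (int tick = 0; remaining > 0; ++tick) {
    auto ready = [&](int i) { return tasks[i].finish < 0 && tasks[i].release <= tick; };
    auto blocked = [&](int i) {
      return tasks[i].before_lock == 0 && tasks[i].locked > 0 && holder != -1 && holder != i;
    };
    // Effective priorities: with inheritance, the holder is boosted to its highest waiter.
    std::vector<int> eff(n);
    for (int i = 0; i < n; ++i) eff[i] = tasks[i].priority;
    if (inherit && holder != -1) {
      for (int i = 0; i < n; ++i) {
        if (ready(i) && blocked(i)) eff[holder] = std::max(eff[holder], tasks[i].priority);
      }
    }
    // Run the highest-priority task that is ready and not waiting for the lock.
    int run = -1;
    for (int i = 0; i < n; ++i) {
      if (ready(i) && !blocked(i) && (run == -1 || eff[i] > eff[run])) run = i;
    }
    if (run == -1) continue;  // CPU idle this tick
    Task& t = tasks[run];
    if (t.before_lock > 0) {
      --t.before_lock;
    } else if (t.locked > 0) {
      holder = run;                      // acquire (or keep holding) the lock
      if (--t.locked == 0) holder = -1;  // critical section finished: release
    }
    if (t.before_lock == 0 && t.locked == 0) {
      t.finish = tick + 1;
      --remaining;
    }
  }
  std::vector<int> finish;
  for (const Task& t : tasks) finish.push_back(t.finish);
  return finish;
}

// The scenario from the timelines, as {priority, release, before_lock, locked}.
std::vector<Task> ExampleScenario() {
  return {
      {1, 0, 0, 4},   // low: takes the lock at tick 0
      {2, 3, 10, 0},  // medium: arrives at tick 3 (time C in the inversion timeline), never locks
      {3, 1, 1, 1},   // high: arrives at tick 1 (time A), needs the lock at tick 2 (time B)
  };
}
```

For `ExampleScenario()`, `Simulate(..., false)` returns finish ticks {15, 13, 16}: the high-priority task waits for all of the medium-priority work. `Simulate(..., true)` returns {5, 16, 6}: the boosted low-priority task releases the lock at tick 5, the high-priority task finishes at tick 6, and the medium-priority task absorbs the delay instead.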

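It’s important to note that priority inheritance effectively turns part of the low-priority thread into real-time code: while it holds a lock that the real-time thread is waiting for, it runs at the real-time thread’s priority, and its progress directly determines the real-time thread’s latency. Any code protected by a priority inheritance mutex should therefore be treated as real-time.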
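One practical consequence is to keep the critical section as short as possible: do slow work such as validation before taking the lock, and only copy data while holding it. A minimal sketch, assuming a hypothetical `PIDConstants` with `kp`, `ki` and `kd` fields and a hypothetical "finite and non-negative" rule for gains. The mutex type is a template parameter so a priority inheritance mutex can be plugged in; the test below uses `std::mutex` only as a stand-in.

```cpp
#include <cmath>
#include <initializer_list>
#include <mutex>
#include <stdexcept>

// Hypothetical gain type for this sketch.
struct PIDConstants {
  double kp = 0.0;
  double ki = 0.0;
  double kd = 0.0;
};

template <typename Mutex>
class GainStore {
 public:
  // Called from the non-real-time thread.
  void Set(const PIDConstants& c) {
    // Validation runs before the lock is taken, so it never delays the real-time thread.
    for (double g : {c.kp, c.ki, c.kd}) {
      if (!std::isfinite(g) || g < 0.0) throw std::invalid_argument("invalid PID gain");
    }
    const std::scoped_lock lock(mutex_);
    constants_ = c;  // the critical section is just a small copy
  }

  // Called from the real-time thread every iteration.
  PIDConstants Get() {
    const std::scoped_lock lock(mutex_);
    return constants_;
  }

 private:
  Mutex mutex_;
  PIDConstants constants_{};
};
```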

As mentioned earlier, std::mutex does not support priority inheritance, so we’ll have to use our own mutex implementation. You can enable priority inheritance by using the pthread_mutexattr_setprotocol function, as shown in the simplified code snippet below. A full example implementation can be found here.

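```cpp
#include <pthread.h>

pthread_mutex_t m;
pthread_mutexattr_t attr;

// Each call returns 0 on success; check the return values in real code.
pthread_mutexattr_init(&attr);
// Request the priority inheritance protocol for mutexes created from attr.
pthread_mutexattr_setprotocol(&attr, PTHREAD_PRIO_INHERIT);
pthread_mutex_init(&m, &attr);
// The attribute object is no longer needed once the mutex is initialized.
pthread_mutexattr_destroy(&attr);
```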
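Because MultipleData declares `using mutex = std::mutex;`, one way to switch is to wrap the pthread mutex in a class that provides `lock()`, `unlock()` and `try_lock()`, which is all `std::scoped_lock` needs. The sketch below is our own minimal wrapper with a hypothetical class name, not the full implementation referenced above. It throws if the mutex cannot be created with the priority inheritance protocol.

```cpp
#include <pthread.h>
#include <mutex>  // std::scoped_lock works with any type providing lock()/unlock()
#include <system_error>

// Hypothetical wrapper: a pthread mutex using the priority inheritance protocol,
// exposing lock()/try_lock()/unlock() like std::mutex.
class PriorityInheritanceMutex {
 public:
  PriorityInheritanceMutex() {
    pthread_mutexattr_t attr;
    int rc = pthread_mutexattr_init(&attr);
    if (rc != 0) throw std::system_error(rc, std::generic_category(), "pthread_mutexattr_init");
    rc = pthread_mutexattr_setprotocol(&attr, PTHREAD_PRIO_INHERIT);
    if (rc == 0) rc = pthread_mutex_init(&mutex_, &attr);
    pthread_mutexattr_destroy(&attr);  // not needed once the mutex is initialized
    if (rc != 0) throw std::system_error(rc, std::generic_category(), "priority inheritance mutex");
  }
  ~PriorityInheritanceMutex() { pthread_mutex_destroy(&mutex_); }

  // Like std::mutex, this type can be neither copied nor moved.
  PriorityInheritanceMutex(const PriorityInheritanceMutex&) = delete;
  PriorityInheritanceMutex& operator=(const PriorityInheritanceMutex&) = delete;

  void lock() {
    const int rc = pthread_mutex_lock(&mutex_);
    if (rc != 0) throw std::system_error(rc, std::generic_category(), "pthread_mutex_lock");
  }
  // Returns false if the mutex is already locked (EBUSY) or on any other error.
  bool try_lock() { return pthread_mutex_trylock(&mutex_) == 0; }
  void unlock() { pthread_mutex_unlock(&mutex_); }

  pthread_mutex_t* native_handle() { return &mutex_; }

 private:
  pthread_mutex_t mutex_;
};
```

Inside MultipleData, the switch is then the one-line change `using mutex = PriorityInheritanceMutex;`.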

Now, let’s try running our PID controller again, this time using a priority inheritance mutex.

The maximum recorded latency was 290 µs, well within our 1000 µs deadline. By switching from a std::mutex to a priority inheritance mutex, we kept our real-time control loop within its deadline! If required, it may be possible to achieve better performance with techniques like lock-free algorithms, but if that level of performance isn’t needed, priority inheritance mutexes are a great tool for sharing data with a real-time thread.

Priority inversion is only one of many potential sources of latency in a real-time program; others include hardware and scheduling latency. If you’d like to know more about real-time driver development services, you can read more here or contact us at hello@picknik.ai.